Regression Analysis

Regression analysis is a widely used technique for prediction and forecasting. It is used to determine which independent variables a dependent variable is related to, and how strongly. Linear regression estimates the relationship between variables by fitting a linear equation to the given data, and then uses that equation to predict the value of y, the dependent variable, from x, the independent (explanatory) variable. A model with one independent variable is referred to as Simple Linear Regression, while a model with more than one independent variable is referred to as Multiple Linear Regression.

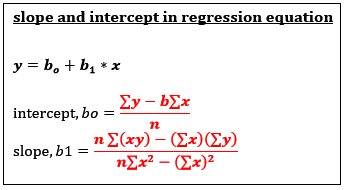

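The equation of Linear Regression is

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k + \varepsilon$$

where β0 is the intercept, βi is the slope coefficient of the independent variable Xi, k is the number of independent variables and ε is the error term.

With a unit increase in Xi, holding the other independent variables constant, there is a βi unit increase (if βi > 0) or decrease (if βi < 0) in Y.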
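The interpretation of βi can be checked numerically. The snippet below is a minimal sketch, assuming NumPy is available; the helper name `fit_linear_regression` and the small data set are made up for illustration. It fits the coefficients by ordinary least squares and recovers the intercept and slopes used to generate y.

```python
import numpy as np

def fit_linear_regression(X, y):
    """Return [b0, b1, ..., bk] estimated by ordinary least squares."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so that the first coefficient is the intercept.
    design = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta

# Illustrative data: y is built exactly as 1 + 2*X1 - 0.5*X2 (no noise).
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 6.0]])
y = 1.0 + 2.0 * X[:, 0] - 0.5 * X[:, 1]

beta = fit_linear_regression(X, y)
print(beta)  # intercept first, then one slope per independent variable
```

Here a unit increase in X1 raises Y by 2 units and a unit increase in X2 lowers Y by 0.5 units, which is what the fitted slopes report.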

Hypothesis test of Model

Null Hypothesis: H0: Model is not significant (β1 = β2 = ... = βk = 0).

V/s Alternative Hypothesis: H1: Model is significant (at least one βi ≠ 0). I use the F test statistic to test this.

If p-value < alpha (here alpha = 0.05), I reject H0 at the 5% level of significance; otherwise I fail to reject it.
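As a rough sketch of where the F test statistic comes from, the snippet below computes it as the ratio of explained to unexplained variation, each divided by its degrees of freedom. NumPy is assumed; the function name `overall_f_statistic` and the simulated data are illustrative only. The p-value is the upper-tail probability of an F distribution with (k, n - k - 1) degrees of freedom, which would come from a statistics library and is not computed here.

```python
import numpy as np

def overall_f_statistic(X, y):
    """Return (F, df_model, df_error) for the overall significance test."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    fitted = design @ beta
    sse = np.sum((y - fitted) ** 2)          # unexplained variation
    ssr = np.sum((fitted - y.mean()) ** 2)   # variation explained by the model
    f_stat = (ssr / k) / (sse / (n - k - 1))
    return f_stat, k, n - k - 1

# Simulated data (an assumption for illustration): y depends on both columns.
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 2))
y = 3.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(scale=0.5, size=30)

f_stat, df_model, df_error = overall_f_statistic(X, y)
print(f_stat, df_model, df_error)
```

A large F relative to the critical value of the F distribution corresponds to a small p-value, and hence to rejecting H0.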

Hypothesis test of slope

Null Hypothesis: H0i: βi = 0, βi is not significant, i.e. Xi should not be included in the model.

V/s Alternative Hypothesis: H1i: βi ≠ 0, βi is significant, i.e. Xi should be included in the model.

If p-value < alpha (here alpha = 0.05), I reject H0i at the 5% level of significance; otherwise I fail to reject it.
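The test on each slope is usually carried out with a t statistic, the estimated coefficient divided by its standard error. The following is a minimal sketch under that assumption, using NumPy; `coefficient_t_statistics` and the simulated data are hypothetical names and values chosen for illustration. The p-value for each t statistic would come from a t distribution with n - k - 1 degrees of freedom, which is not computed here.

```python
import numpy as np

def coefficient_t_statistics(X, y):
    """Return (beta, standard_errors, t_statistics), intercept first."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ beta
    # Residual variance with n - k - 1 degrees of freedom.
    s2 = residuals @ residuals / (n - k - 1)
    # Covariance matrix of the estimates: s^2 * (X'X)^-1.
    cov = s2 * np.linalg.inv(design.T @ design)
    se = np.sqrt(np.diag(cov))
    return beta, se, beta / se

# Simulated data: y depends on the first column only.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 2))
y = 1.0 + 2.0 * X[:, 0] + rng.normal(scale=0.5, size=40)

beta, se, t_stats = coefficient_t_statistics(X, y)
print(t_stats)  # t statistics for the intercept, X1 and X2
```

A coefficient whose |t| is large gives a small p-value, so H0i is rejected and the variable is kept in the model.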

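The confidence interval of Slope

A 100(1 − α)% confidence interval for the slope βi is given by:

$$\hat{\beta}_i \pm t_{\alpha/2,\; n-k-1} \, SE(\hat{\beta}_i)$$

where n is the number of observations and k is the number of independent variables.

The confidence interval and Prediction Interval

A confidence interval for the average expected value of y, E(y), for a given x* is given by (for one independent variable):

$$\hat{y}^* \pm t_{\alpha/2,\; n-2} \; s \sqrt{\frac{1}{n} + \frac{(x^* - \bar{x})^2}{\sum_{j=1}^{n} (x_j - \bar{x})^2}}$$

The prediction interval for an individual value of y for a given x* is given by:

$$\hat{y}^* \pm t_{\alpha/2,\; n-2} \; s \sqrt{1 + \frac{1}{n} + \frac{(x^* - \bar{x})^2}{\sum_{j=1}^{n} (x_j - \bar{x})^2}}$$

Here ŷ* is the predicted value at x* and s is the residual standard error. The prediction interval is always wider than the confidence interval, because it also accounts for the scatter of an individual observation around the mean.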
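To make the difference between the two intervals concrete, here is a small sketch for the one-variable case in plain Python. The function name, the data and the critical value are assumptions for illustration: `t_crit = 2.306` is the two-sided 95% value of the t distribution with 8 degrees of freedom, matching the 10 observations used.

```python
import math

def mean_and_prediction_intervals(x, y, x_star, t_crit):
    """Return (y_hat, confidence_interval, prediction_interval) at x_star."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx                 # slope
    b0 = y_bar - b1 * x_bar        # intercept
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    s = math.sqrt(sse / (n - 2))   # residual standard error
    y_hat = b0 + b1 * x_star
    spread = 1 / n + (x_star - x_bar) ** 2 / sxx
    ci_half = t_crit * s * math.sqrt(spread)       # interval for E(y)
    pi_half = t_crit * s * math.sqrt(1 + spread)   # interval for a single y
    return (y_hat,
            (y_hat - ci_half, y_hat + ci_half),
            (y_hat - pi_half, y_hat + pi_half))

# Illustrative data, roughly y = 2x with small deviations.
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1, 18.0, 19.9]

y_hat, ci, pi = mean_and_prediction_intervals(x, y, x_star=5.5, t_crit=2.306)
print(y_hat, ci, pi)
```

Both intervals are centred on the same predicted value; the prediction interval is wider because of the extra 1 under the square root.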

Adjusted R2 and Coefficient of Determination (R2)

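Adjusted R^2 gives the percentage of variation in the dependent variable that is explained by the independent variables, adjusted for the number of independent variables in the model, so that only variables which genuinely add explanatory power raise it. Adjusted R^2 increases with the addition of significant variables. The formula for adjusted R^2 is given by:

$$R^2_{adj} = 1 - \frac{(1 - R^2)(n - 1)}{n - k - 1}$$

where n is the number of observations and k is the number of independent variables.

The coefficient of determination, R^2, gives the percentage of variation in the dependent variable that is explained by all the independent variables in the model. The formula for R^2 is given by:

$$R^2 = 1 - \frac{SSE}{SST} = 1 - \frac{\sum_{j=1}^{n} (y_j - \hat{y}_j)^2}{\sum_{j=1}^{n} (y_j - \bar{y})^2}$$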
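Both measures are easy to compute by hand from the observed and fitted values. The snippet below is a small sketch in plain Python; the function names and the five data points are illustrative assumptions, with k = 1 independent variable.

```python
def r_squared(y, y_hat):
    """R^2 = 1 - SSE / SST."""
    y_bar = sum(y) / len(y)
    sse = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    sst = sum((a - y_bar) ** 2 for a in y)
    return 1 - sse / sst

def adjusted_r_squared(r2, n, k):
    """Adjusted R^2 for n observations and k independent variables."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)

# Illustrative observed and fitted values.
y = [1.0, 2.0, 3.0, 4.0, 5.0]
y_hat = [1.1, 1.9, 3.2, 3.8, 5.0]

r2 = r_squared(y, y_hat)
adj_r2 = adjusted_r_squared(r2, n=len(y), k=1)
print(r2, adj_r2)
```

In this example SSE is 0.10 and SST is 10, so R^2 is about 0.99, and the adjustment pulls it down slightly.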

Step-wise Regression Analysis

Step-wise regression is a semi-automated process of building a model by successively adding or removing variables based solely on the t-statistics of their estimated coefficients. The candidate models it produces contain different numbers of predictors, and the adjusted R-squared is a way to compare their explanatory power. The adjusted R-squared increases only if the new term improves the model more than would be expected by chance. It decreases when a predictor improves the model by less than expected by chance. It is never higher than the R-squared.
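One simple variant of this idea is backward elimination: start with all variables and repeatedly drop the one with the smallest |t| until every remaining variable clears a threshold. The sketch below covers only this removal direction, not the adding step. It assumes NumPy; the function names, the simulated data and the cut-off `t_threshold=2.0` are hypothetical choices for illustration, not a recommended setting.

```python
import numpy as np

def slope_t_statistics(X, y):
    """t statistics of the slope coefficients (intercept excluded)."""
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    s2 = resid @ resid / (n - k - 1)
    se = np.sqrt(np.diag(s2 * np.linalg.inv(design.T @ design)))
    return (beta / se)[1:]

def backward_elimination(X, y, names, t_threshold=2.0):
    """Drop the weakest variable until all remaining |t| >= t_threshold."""
    kept = list(range(X.shape[1]))
    while kept:
        t_abs = np.abs(slope_t_statistics(X[:, kept], y))
        weakest = int(np.argmin(t_abs))
        if t_abs[weakest] >= t_threshold:
            break  # every remaining variable passes the threshold
        kept.pop(weakest)
    return [names[j] for j in kept]

# Simulated data: y depends on x1 and x2; x3 is unrelated noise.
rng = np.random.default_rng(1)
X = rng.normal(size=(60, 3))
y = 2.0 + 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=60)

selected = backward_elimination(X, y, names=["x1", "x2", "x3"])
print(selected)
```

The two variables that actually drive y have very large t statistics and are retained; whether the noise variable is dropped depends on its t statistic in the particular sample.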

What is Multicollinearity?

Collinearity (or multicollinearity) is the undesirable situation where the correlations among the independent variables are strong. Multicollinearity increases the standard errors of the coefficients. Increased standard errors mean that the coefficients of some independent variables may be found not to be significantly different from 0, whereas without multicollinearity, and with lower standard errors, these same coefficients might have been found significant. Thus, it makes some variables appear statistically insignificant when they would otherwise be significant.

Variance inflation factors (VIF) measure how much the variance of an estimated coefficient is increased over the case of no correlation among the independent variables. If no two independent variables are correlated, then all the VIFs will be 1. As a rule of thumb, if the VIF for one of the variables is greater than 3.3, there is collinearity associated with that variable; other authors use less strict cut-offs such as 5 or 10.
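The VIF of a variable can be obtained by regressing it on all the other independent variables and computing 1 / (1 − R²) from that auxiliary regression. The following is a minimal sketch of that calculation, assuming NumPy; the function name and the small hand-built data sets are illustrative only.

```python
import numpy as np

def variance_inflation_factors(X):
    """VIF of each column of X, via regression on the remaining columns."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    vifs = []
    for j in range(k):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        design = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        r2 = 1 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        vifs.append(1 / (1 - r2))
    return vifs

# Two uncorrelated columns (their centred dot product is zero).
x1 = np.array([1, -1, 1, -1, 1, -1, 1, -1], dtype=float)
x2 = np.array([1, 1, -1, -1, 1, 1, -1, -1], dtype=float)
# A third column that is almost a copy of x1.
z = np.array([1, 1, 1, 1, -1, -1, -1, -1], dtype=float)
x3 = x1 + 0.1 * z

vif_uncorrelated = variance_inflation_factors(np.column_stack([x1, x2]))
vif_collinear = variance_inflation_factors(np.column_stack([x1, x2, x3]))
print(vif_uncorrelated)
print(vif_collinear)
```

With the uncorrelated columns every VIF is 1. Once the near-copy x3 is added, the VIFs of x1 and x3 rise far above 3.3, while x2, which is unrelated to both, stays at 1.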

What are the assumptions of Regression Analysis?

The regression model is easy to use and apply, but its major limitation is that it gives reliable results only if the dependent variable is linearly related to the independent variables. Its other assumptions are:

- The model should not have multicollinearity, which means the independent variables should not be strongly correlated with each other.

- There should be no auto-correlation, that is, the error terms must not be correlated with each other.

- The variance of the error terms must be constant, that is, there should be no heteroscedasticity.

- Residuals should be normally distributed.
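Some of these assumptions can be checked directly from the residuals. As one example, the Durbin-Watson statistic is a common check for first-order auto-correlation; the sketch below is a plain-Python illustration with made-up residual sequences, not a complete diagnostic routine.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic: sum of squared successive differences
    of the residuals divided by their sum of squares."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2
              for t in range(1, len(residuals)))
    den = sum(e * e for e in residuals)
    return num / den

# Residuals that flip sign every step (negative auto-correlation).
alternating = [1.0, -1.0, 1.0, -1.0]
# Residuals that never change (extreme positive auto-correlation).
constant = [1.0, 1.0, 1.0, 1.0]

dw_alternating = durbin_watson(alternating)
dw_constant = durbin_watson(constant)
print(dw_alternating, dw_constant)
```

The statistic lies between 0 and 4. Values near 2 suggest no first-order auto-correlation, values towards 0 suggest positive auto-correlation and values towards 4 suggest negative auto-correlation.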

Not all of these assumptions hold for real-world data, which makes it difficult for managers to rely on predictions from a regression model.
